About me

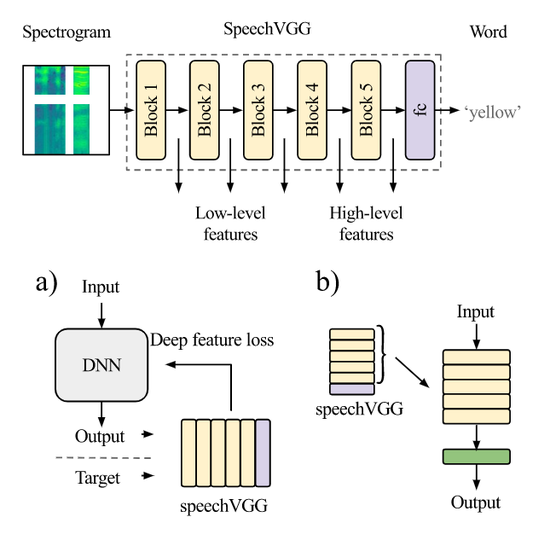

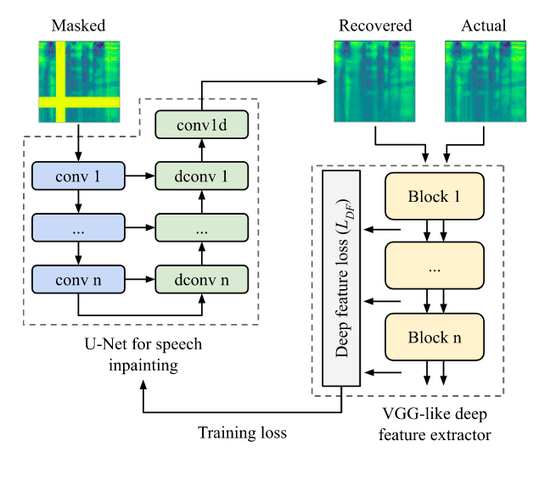

I am an Audio Machine Learning Scientist at Bose Corporation. My current research is centered around the development of novel ML-based methods for lightweight, on-device speech and audio signal processing, with a particular focus on speech enhancement and hearing augmentation.

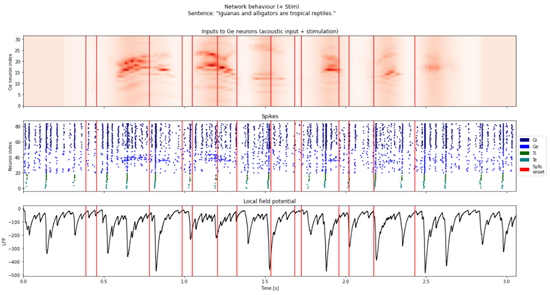

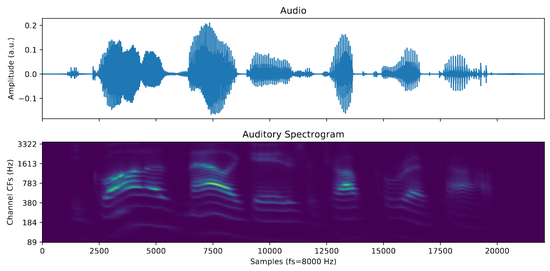

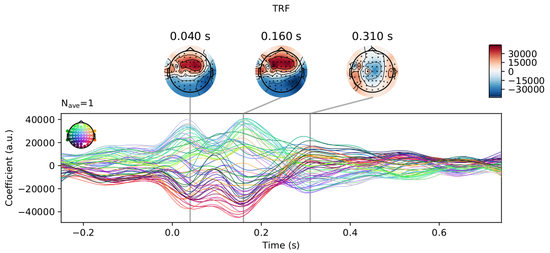

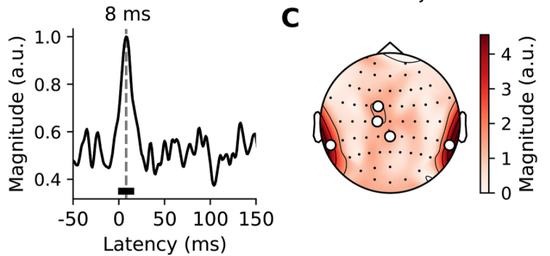

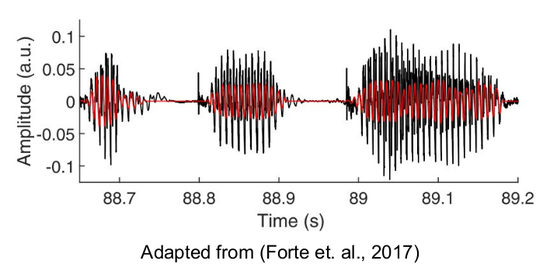

Before, I was a PhD student in the Department of Bioengineering & Centre for Neurotechnology at Imperial College London (ICL). As a member of the Sensory Neuroengineering lab led by Prof. Tobias Reichenbach, my research focused on understanding neural mechanisms underlying perception and comprehension of natural speech, especially in challenging listening conditions. In my work, I combined computational modelling with neuroimaging and non-invasive brain stimulation to understand how natural speech is processed across human auditory pathways.

In addition to my PhD research, I also worked as an Applied Scientist Intern at Amazon - Lab 126, a Scientific Advisor at Logitech and a Consultant for clinical data analysis at INBRAIN Neuroelectronics.

For more details, see my CV, explore this website or get in touch!

Interests

- Machine Learning

- Speech Signal Processing

- (Bio)Signal Processing

- Computational Neuroscience

- Auditory Cognitive Neuroscience

Education

-

PhD in Neurotechnology, 2022

Imperial College London, UK

-

MRes in Neurotechnology, 2018

Imperial College London, UK

-

MSc in Biomedical Engineering, 2017

Imperial College London, UK

-

BEng in Biomedical Engineering, 2016

Warsaw University of Technology, Poland